Data is proliferating at an accelerating rate, driven by the mobile and desktop apps, social media, online purchasing, and consumer loyalty programs available today. These data sources have not just changed the way we operate on a day-to-day basis; they have also immensely increased the volume, velocity, and variety of data being created.

Faced with this growing trend, data professionals now often have to look beyond the relational database to NoSQL database technologies to fully address their data management needs. The four categories of NoSQL databases are column-oriented, key-value, graph, and document-oriented databases, and each one is best suited to fill a specific data management niche.

Document-oriented databases, which store data in JavaScript Object Notation (JSON) documents, are commonly used to collect machine-generated data. This data is often generated rapidly and in high volume, and therefore is often difficult to manage and analyze with systems other than document-oriented databases.

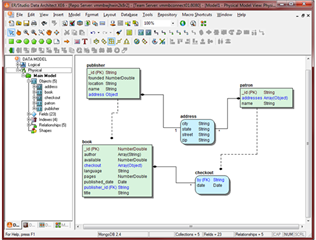

MongoDB is a specific example of a document-oriented database and, although it excels at handling this kind of data, its schema-less architecture can pose problems related to data quality. For instance, because field names are case-sensitive, a single MongoDB collection could technically contain separate fields named accountNumber, AccountNumber, and accountnumber. The data quality problem arises when a report that requires customer account numbers queries only the AccountNumber field and, therefore, receives only a subset of the records. To steer clear of this problem, spend some time modeling your data before implementing a MongoDB system.
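The field-name problem described above can be illustrated with a minimal sketch. It works on plain Python dictionaries standing in for documents from one collection, so it does not use a MongoDB driver; the helper `find_case_variant_fields` and the sample documents are hypothetical and exist only for illustration.

```python
from collections import defaultdict


def find_case_variant_fields(documents):
    """Group top-level field names that differ only by letter case."""
    variants = defaultdict(set)
    for doc in documents:
        for field in doc:
            variants[field.lower()].add(field)
    # Keep only the names that occur with more than one spelling.
    return {key: sorted(names) for key, names in variants.items() if len(names) > 1}


# Hypothetical documents, as they might sit side by side in one collection.
sample_docs = [
    {"_id": 1, "accountNumber": "A-100"},
    {"_id": 2, "AccountNumber": "A-200"},
    {"_id": 3, "accountnumber": "A-300"},
]

conflicts = find_case_variant_fields(sample_docs)

# A report that reads only one spelling sees just a subset of the records.
report_rows = [doc for doc in sample_docs if "AccountNumber" in doc]
```

Here `conflicts` lists the three spellings under a single lowercase key, while `report_rows` contains only one of the three documents. A check like this can flag the symptom after the fact, but agreeing on field names in a data model up front avoids it.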

ER/Studio Data Architect makes modeling MongoDB easier with support for native reverse engineering, plus a special modeling notation for the embedded object arrays used in MongoDB. This “is-contained-in” notation indicates the array relationship for nested objects in the model view. Additionally, Data Architect can forward engineer to blank-sample JSON, providing round-trip support. MongoDB sources can be accessed securely using Kerberos authentication and SSL encryption.

Other applications for big data often use Apache Hadoop as the underlying data management platform. Due to Hadoop’s schema-less architecture and its ability to horizontally scale over commodity hardware, data storage is no longer the limiting factor for performing in-depth analytics. Hadoop gives data professionals the ability to keep up with the volume, velocity, and variety of data being created. However, its schema-less architecture does not mean that data modeling can be avoided.

Since data stored in Hadoop is almost always used for analytics, and because of its immense volume and wide variety, the need for data modeling has never been greater. As an example, if a micro-targeting strategy relies on personal data captured from a campaign website, then a dimensional data model should be used to dissect the data into facts, such as website visits by potential supporters, and dimensions, such as the location and time of their visits. Without this separation, the visits cannot easily be aggregated and compared by location or by time.
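As a rough sketch of the fact and dimension split in that example, the Python below turns a few raw visit events into a location dimension, a time dimension, and a visit fact table. The record layout, the field names (`city`, `date`, `visit_count`), and the helper functions are assumptions made for illustration; they are not taken from Hadoop, Hive, or ER/Studio.

```python
from collections import Counter

# Hypothetical raw events captured from a campaign website.
raw_visits = [
    {"visitor": "v1", "city": "Austin", "date": "2024-03-01"},
    {"visitor": "v2", "city": "Austin", "date": "2024-03-01"},
    {"visitor": "v1", "city": "Denver", "date": "2024-03-02"},
]


def build_dimension(rows, attribute):
    """Assign a surrogate key to each distinct value of one attribute."""
    keys = {}
    for row in rows:
        keys.setdefault(row[attribute], len(keys) + 1)
    return keys


location_dim = build_dimension(raw_visits, "city")
time_dim = build_dimension(raw_visits, "date")

# Fact table: one row per visit, pointing at the dimensions by key.
visit_facts = [
    {
        "location_key": location_dim[row["city"]],
        "time_key": time_dim[row["date"]],
        "visit_count": 1,
    }
    for row in raw_visits
]


def visits_by(dimension, key_name):
    """Sum the visit_count measure grouped by one dimension."""
    labels = {key: value for value, key in dimension.items()}
    totals = Counter()
    for fact in visit_facts:
        totals[labels[fact[key_name]]] += fact["visit_count"]
    return dict(totals)
```

With this layout, `visits_by(location_dim, "location_key")` totals the visits per city and `visits_by(time_dim, "time_key")` totals them per date, which is the kind of slicing a dimensional model is meant to support.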

ER/Studio Data Architect provides support for Hadoop Hive with native reverse-engineering and DDL generation, and is compatible with many of the major third-party implementations such as Hortonworks. The reverse engineering process generates datatypes and storage properties specific to Hadoop Hive.

Read more about ER/Studio’s big data support.